Verification and validation: why they matter when creating a test case

Successful test case verification needs powerful test management solutions. Functionize uses machine learning to deliver peerless test management.

When creating a good test plan, verification and validation (V&V) are, of course, a given. After all, V&V is the process through which a company proves their product actually works. Users are not very likely to pick up a product that has not undergone these types of testing. And who can blame them? If a product cannot prove it does what it promises, what motivation could there possibly be to use it?

Therefore, the importance of verifications in test cases literally cannot be overstated. For a software tool to be truly viable, verifications must be done; it’s as simple (and complicated) as that. Simple, because it has to be done. Complicated (as we will see), because verification, validation, test cases, and test scenarios all go into the test planning “bucket.”

Unpacking what each of these terms means can be tricky. Not only that, we must also understand why verification must come first. This is particularly true for testers who may lack extensive coding experience. To that end, let’s clear the air a little by taking a deeper look at verifications of test cases.

Verification vs Validation

Verification and validation are joined at the hip. Indeed, it’s a rare occurrence to see one mentioned without the other. This means that from the outside looking in, both customers and DevOps might not know the difference. What’s more, they may assume these stages of testing happen concurrently. The understanding that verification always comes first can’t be taken for granted. Below we shall define these terms for our purposes; more in-depth definitions can be read at https://en.wikipedia.org/wiki/Software_verification_and_validation.

Counterintuitively to many, verification does not test whether or not a product gives correct answers. It does, however, test whether or not a product works as specified. Confusing, right? Well, verification checks to see if the software outputs the right things. That means testing to see if all the parts and pieces talk to each other the right way, followed by testing to see if the combination of parts and pieces outputs the right type of information. Verification testing is requirements testing: does the product provide the desired features?

Moving on, validation is the second step of testing. Validation testing is what many think of as “real testing.” That is to say, validation checks to see if the software outputs correct things. Validation testing proves that the software outputs are factually correct. But validation can’t take place without verification. We can’t know if the outputs are true if we don’t know the outputs are right.

To put it in other words, verification is making sure outputs are the right things; validation is making sure the outputs are right. Production can go no further until verification is complete.
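To make the distinction concrete, here is a minimal Python sketch using the definitions above. Everything in it is an assumption made for illustration: `LoginResult`, `login`, `verify`, and `validate` are hypothetical names, and the toy `login` function stands in for a real product.

```python
from dataclasses import dataclass


@dataclass
class LoginResult:
    """Hypothetical output of a login feature."""
    success: bool
    message: str


def login(username: str, password: str) -> LoginResult:
    """Toy stand-in for the product under test (credentials are assumed)."""
    if username == "exampleuser" and password == "password":
        return LoginResult(True, "Welcome, exampleuser")
    return LoginResult(False, "Invalid username or password")


def verify(result: object) -> bool:
    """Verification: is the output the right kind of thing?"""
    return (
        isinstance(result, LoginResult)
        and isinstance(result.success, bool)
        and isinstance(result.message, str)
        and result.message != ""
    )


def validate(result: LoginResult, expected_success: bool) -> bool:
    """Validation: is the output actually correct for this input?"""
    return result.success == expected_success


result = login("incorrectuser", "password")
verified = verify(result)
# Validation only makes sense once verification has passed.
validated = verified and validate(result, expected_success=False)
```

Note that `validate` is only consulted after `verify` succeeds, which mirrors the ordering described above.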

Test Case vs Test Scenario

So, now we know what verification and validation mean. Specifically, we know what verification testing is and why it always comes first. Moving on, let’s look at how to verify test cases. In particular, let’s look at test management.

What is a test scenario?

A test plan consists of two parts: test cases and test scenarios. It all starts with test scenarios. A test scenario is a broad, often vague, problem statement. For example, a test scenario might be:

“Open a website, input a username and password, and test for success / failure.”

Test scenarios create the overall test plan for testing all of a product’s features. Test scenarios are like design documents, say a picture of a Lego castle. They tell testers the ultimate goal of testing.

So, what is a test case?

On the other hand, test cases are the unit tests that take place during each test scenario. They are like the individual Legos. They test each tiny part and piece of the code behind the test scenario. The above example test scenario would have test cases like:

- Input username “exampleuser”

- Input password “password”

- Check for success

- Input username “incorrectuser”

- Input password “password”

- Check for failure

A very good walkthrough of everything it takes to go from scenario to case is https://www.guru99.com/test-case.html.

This test case runs for each possible combination of usernames, passwords, success, and failure results. As this simple case highlights, testing even a basic feature can require a large volume of test cases. This is especially true for verification testing. Validation tests have to test each feature a user sees. Verifications, though? A much vaster beast to tackle.
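The growth in test volume is easy to see in a short Python sketch. The usernames, passwords, and the single valid credential pair below are assumed values chosen for illustration, and `build_test_cases` is a hypothetical helper.

```python
from itertools import product

# Assumed for illustration: the only credential pair that should succeed.
VALID_CREDENTIALS = {("exampleuser", "password")}

usernames = ["exampleuser", "incorrectuser", ""]
passwords = ["password", "wrongpassword", ""]


def build_test_cases(usernames, passwords):
    """Pair every username with every password and attach the expected result."""
    cases = []
    for username, password in product(usernames, passwords):
        is_valid = (username, password) in VALID_CREDENTIALS
        cases.append(
            {
                "username": username,
                "password": password,
                "expected": "success" if is_valid else "failure",
            }
        )
    return cases


test_cases = build_test_cases(usernames, passwords)
print(len(test_cases))  # 3 usernames x 3 passwords = 9 test cases
```

Three values per field already produce nine cases. Adding a fourth value to each field would produce sixteen, before any other input fields are considered.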

Not only do the test cases have to pass, each individual step of each test case must be verified. Does the input field accept text characters? How many? Does capitalization matter? What happens if the user enters a number? Does the right error message show? Every feature must be validated; every line of code must be verified.
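The questions above can each become a step-level check. The sketch below assumes some rules purely for illustration (a 20-character limit, case-insensitive usernames, and rejection of all-digit input). A real product would take these rules from its requirements, and `check_username_input` and `normalize` are hypothetical helpers.

```python
MAX_LENGTH = 20  # assumed limit, for illustration only


def check_username_input(text: str) -> str:
    """Return an error message, or an empty string if the input is accepted."""
    if not text:
        return "Username is required"
    if len(text) > MAX_LENGTH:
        return f"Username must be at most {MAX_LENGTH} characters"
    if text.isdigit():
        return "Username cannot be only numbers"
    return ""


def normalize(text: str) -> str:
    """Assumed rule: usernames are compared case-insensitively."""
    return text.lower()


# One check per question asked about the input field.
step_checks = [
    ("accepts text", check_username_input("exampleuser") == ""),
    ("enforces length", check_username_input("x" * (MAX_LENGTH + 1)) != ""),
    ("ignores capitalization", normalize("ExampleUser") == normalize("exampleuser")),
    ("shows the right error for numbers",
     check_username_input("12345") == "Username cannot be only numbers"),
]

failed = [name for name, ok in step_checks if not ok]
```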

All this goes to show that verification testing is all but impossible without test management tools. To have any chance at success, testers have to have some tools for wrangling the volume of tests and even greater volume of data. Which brings us to our next topic: what, exactly, does this tool look like?

Test management tools

Without a doubt, verification testing is the most important and arguably the most labor-intensive step in software testing. Think about how many test cases came out of our simple example. Now, think about testing all the code behind the test cases. Ironically, testing code takes even more code. Something has to keep it from becoming turtles all the way down.

The biggest challenges facing verification testers include:

- Breaking down test scenarios into test cases.

- Writing scripts for each test case verification.

- Monitoring scripts for errors and/or updates.

- Storing, manipulating, and visualizing data.

The requirements for a test management tool

In light of these challenges, it stands to reason that a good test management tool has a few “must-have” features. First, it needs to have some ability to turn test scenarios into test cases. Second, it needs to have the ability to execute these test cases. Third, it needs some way to replay tests. And fourth, it needs to have a viable way to store verification data. More succinctly, test management tools must:

- Shorten test case development time.

- Take the scripting out of testing.

- Automate massive test volumes and variations.

- Ensure test accuracy across product versions.

- Provide accessible data logs, storage, and playback.
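As a rough illustration of the last two requirements, the following Python sketch keeps a log of results per product version and reports which test cases regressed between versions. `RunLog`, its methods, and the version and case names are all hypothetical. A real test management tool would persist this data and offer far richer queries.

```python
from dataclasses import dataclass, field


@dataclass
class RunLog:
    """Minimal in-memory store of test results (illustrative only)."""
    records: list = field(default_factory=list)

    def record(self, version: str, case_id: str, passed: bool) -> None:
        """Store one test result for one product version."""
        self.records.append({"version": version, "case": case_id, "passed": passed})

    def results_for(self, version: str) -> dict:
        """Map each case id to its pass/fail result for the given version."""
        return {r["case"]: r["passed"] for r in self.records if r["version"] == version}

    def regressions(self, old: str, new: str) -> list:
        """List cases that passed in the old version but failed in the new one."""
        before, after = self.results_for(old), self.results_for(new)
        return sorted(c for c, ok in before.items() if ok and after.get(c) is False)


log = RunLog()
log.record("1.0", "login-valid", True)
log.record("1.0", "login-invalid", True)
log.record("1.1", "login-valid", False)
log.record("1.1", "login-invalid", True)

broken = log.regressions("1.0", "1.1")
```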

Machine learning for test management

There are many options when it comes to test management software. Unfortunately, there are also many shortcomings in traditional test management tools. We’ve learned the unique challenges in both executing and analyzing verification test data. However, one challenge in particular for management tools stands out: test automation.

That’s not to say this limitation is intentional; verification testing is extraordinarily hard to automate. It’s clear to the testing community by now that effective testing without automation is a pure no-go. So what makes verifications so special?

Well, as we’ve shown above, verification takes a lot of tests. Like, a lot a lot. Even worse, these tests need test scripts. Test scripts need human authors. Test scripts often have to change when features change. Moreover, test scripts are brittle, and tracking down broken scripts is a Sisyphean task, even on a good day. Put simply, test automation needs (1) a way to generate scripts on the fly and (2) a way to self-heal broken tests. This is why we turn to machine learning.

How machine learning helps us

Fortunately, machine learning can now accomplish these two vital tasks. Two machine learning technologies make verification testing “automatable.”

The first is Natural Language Processing (NLP). Here, NLP is the process through which plain human English is translated into code a computer can understand. NLP lets test managers turn their vague test scenarios over to the test management platform and let it create the test cases, and thereby test scripts, for them. Even better, NLP lets team members who don’t necessarily know how to code contribute to the test plan.
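To give a feel for the idea of turning plain English into something a computer can act on, here is a deliberately simple Python sketch. It uses hand-written regular expressions rather than machine learning, so it is only a toy stand-in for real NLP, and it does not represent how any particular product works. The step phrasing, `PATTERNS`, `parse_step`, and `parse_test_case` are assumptions made for this example.

```python
import re

# Hand-written patterns for two kinds of plain-English step (assumed phrasing).
PATTERNS = [
    (re.compile(r'^input (?P<field>\w+) ["“](?P<value>[^"”]*)["”]$', re.I), "input"),
    (re.compile(r'^check for (?P<outcome>success|failure)$', re.I), "check"),
]


def parse_step(sentence: str) -> dict:
    """Translate one plain-English step into a structured instruction."""
    text = sentence.strip()
    for pattern, action in PATTERNS:
        match = pattern.match(text)
        if match:
            return {"action": action, **match.groupdict()}
    raise ValueError(f"Unrecognized step: {sentence!r}")


def parse_test_case(description: str) -> list:
    """Split a semicolon-separated description into structured steps."""
    return [parse_step(part) for part in description.split(";") if part.strip()]


steps = parse_test_case(
    'Input username "exampleuser"; Input password "password"; Check for success'
)
```

A rule-based parser like this breaks as soon as someone phrases a step differently, which is exactly the gap that learned language models are meant to close.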

The second is Dynamic Learning. Or as a snazzier term, Self-Healing. Using machine learning, testing software can learn as it tests. Over time, the test management tool learns about its job, gets smarter at creating test cases, and most importantly, recognizes when tests break. A simple feature update has the potential to invalidate an entire set of test cases. Cue a human wasting time finding the broken tests and repairing or removing them. However, a self-healing tool sees the break coming and either fixes it or lets its human know exactly where to intervene.
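The self-healing idea can be sketched in a few lines of Python. This is a simple attribute-matching heuristic, not machine learning, and it is not a description of any vendor's implementation. The page model (a list of dictionaries), the `find_element` function, and the `min_matches` threshold are all assumptions made for illustration.

```python
def find_element(page: list, locator: dict):
    """Return (element, healed); healed is True when a fallback match was used."""
    # First try the exact id the test was recorded with.
    for element in page:
        if element.get("id") == locator["id"]:
            return element, False

    # Fallback: pick the element sharing the most remembered attributes.
    def score(element: dict) -> int:
        return sum(1 for k, v in locator["attributes"].items() if element.get(k) == v)

    best = max(page, key=score, default=None)
    if best is None or score(best) < locator.get("min_matches", 2):
        # Not confident enough to heal: tell the human exactly what broke.
        raise LookupError(f"Could not locate element {locator['id']!r}")

    locator["id"] = best.get("id")  # "heal" the locator for future runs
    return best, True


# After a feature update, the button id changed from "login-btn" to "signin-btn".
new_page = [
    {"id": "signin-btn", "tag": "button", "text": "Log in", "type": "submit"},
    {"id": "help-link", "tag": "a", "text": "Help"},
]
locator = {
    "id": "login-btn",
    "attributes": {"tag": "button", "text": "Log in", "type": "submit"},
}

element, healed = find_element(new_page, locator)
```

When no candidate is similar enough, the sketch raises an error naming the broken locator, which corresponds to the tool letting its human know where to intervene.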

Bringing it all together

Now we have a nice, clear picture of all the testing pieces. This friendly picture shows us what verification testing is, how to do it, and what tools are necessary. Finally, we can definitively state that verification testing demands a testing platform capable of:

- Bringing test scenarios together.

- Translating test scenarios into test cases.

- Automating test cases with self-healing.

- Storing, replaying, and visualizing test data.

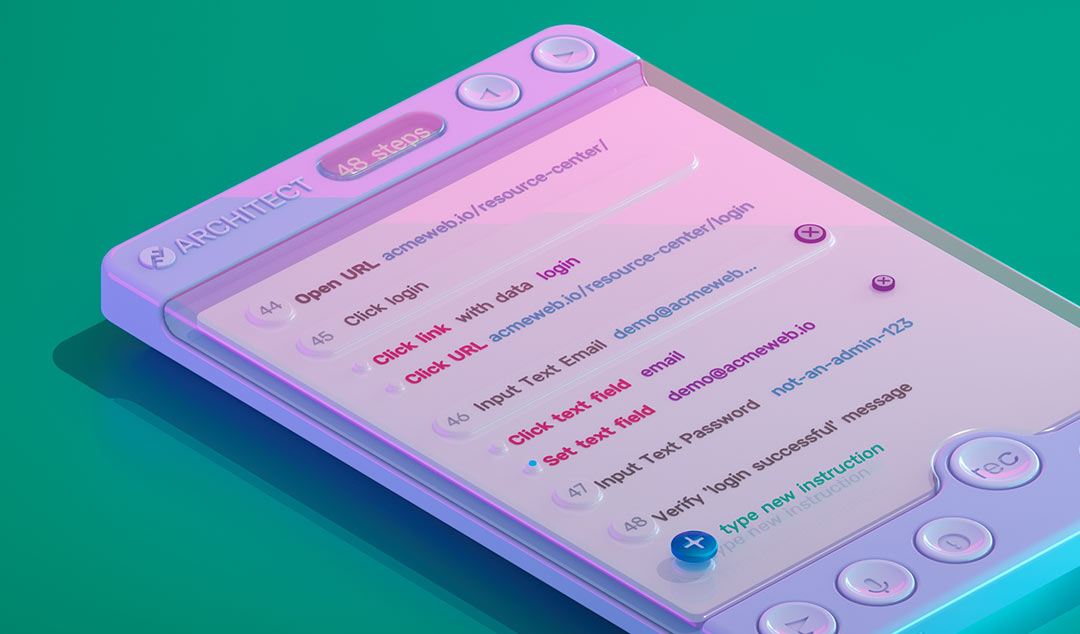

Functionize Architect and NLP test generation use the machine learning discussed above to create this exact test management platform. They build on a strong foundation of machine learning, which empowers the Functionize test platform to provide all of the “must-have” features of a test management tool. From start to finish, the Functionize test platform walks you through the entire verification process:

- First, ALP uses the power of NLP to quickly turn vague test scenarios into detailed test cases. That is to say, ALP removes much of the tedious, error-prone script writing stage of verification.

- Second, Architect automates verification testing the right way using dynamic learning. Architect learns from verification tests in real time. Accordingly, it will find and resolve testing errors much faster than a human tester ever could.

- Third, Architect is a next-generation test recorder that does much more than record tests. It provides user-friendly tools to store, organize, replay, and visualize the massive volume of data generated during verification.

Machine learning and Functionize together let you cruise through the murky waters of verification. Functionize powers you through the grind of verification and gets your customers trusting you right out of the gate. For a demo of Architect, visit https://www.functionize.com/demo/ today.