5 rules for successful test automation

The key to successful use of test-automation tools is to correctly calibrate expectations: Recognize what the software can do and be equally aware of what it cannot do. That helps you make useful decisions in the most important areas: what can and should be automated, how it should be done, and who should do it.

The purpose of automated testing is to help people work smarter. Use test automation to extend the testing effort into places humans can't practically reach, and to amplify the work of good testers.

Despite the field's established history, some CIOs and managers expect test automation to automate all product testing, magically achieve 100% code coverage, and catch every bug. Some even secretly believe they can fire all the talented people and hire a chimp.

1. Automation reflects a process; it doesn’t replace it

Don't confuse test automation with vendor tools. However good they are (and we do like to think that Functionize exemplifies the best possible options!), any tool can only help you improve a process. Think of a kitchen blender: it combines ingredients more efficiently than a mortar and pestle. Or consider carpentry: power tools help a carpenter build a house faster than hand tools do, but she doesn't just sit back and watch the tools build the house by themselves.

Rather, approach test automation as a way to test applications for conformance to the QA criteria by which you judge software as ready to release. The tools allow you to submit input, capture output, and compare it against a baseline. In other words, test automation tools merely automate a process you already (we hope) have in place.
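
To make the "submit input, capture output, compare against a baseline" loop concrete, here is a minimal sketch in Python. It assumes the application under test can be called as a plain function; `format_price`, `compare_to_baseline`, and `BASELINE` are hypothetical names invented for illustration, not any vendor tool's API. Note that the baseline itself encodes a human judgment: it is only as good as the process that decided those outputs were correct.

```python
from typing import Callable, Dict, List, Tuple


def format_price(cents: int) -> str:
    """Hypothetical application code whose output we want to check."""
    return f"${cents // 100}.{cents % 100:02d}"


def compare_to_baseline(
    sut: Callable[[int], str],
    baseline: Dict[int, str],
) -> List[Tuple[int, str, str]]:
    """Submit each baseline input, capture the output, and return every
    (input, expected, actual) triple where the output differs."""
    mismatches = []
    for test_input, expected in baseline.items():
        actual = sut(test_input)  # submit input, capture output
        if actual != expected:    # compare against the baseline
            mismatches.append((test_input, expected, actual))
    return mismatches


# Hypothetical baseline, recorded from a release testers already judged correct.
BASELINE = {0: "$0.00", 5: "$0.05", 1999: "$19.99", 100000: "$1000.00"}

if __name__ == "__main__":
    failures = compare_to_baseline(format_price, BASELINE)
    for test_input, expected, actual in failures:
        print(f"input={test_input}: expected {expected!r}, got {actual!r}")
    print("PASS" if not failures else f"FAIL ({len(failures)} mismatches)")
```

The tool runs the loop tirelessly, but choosing the inputs and deciding what belongs in the baseline remains the testers' job.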

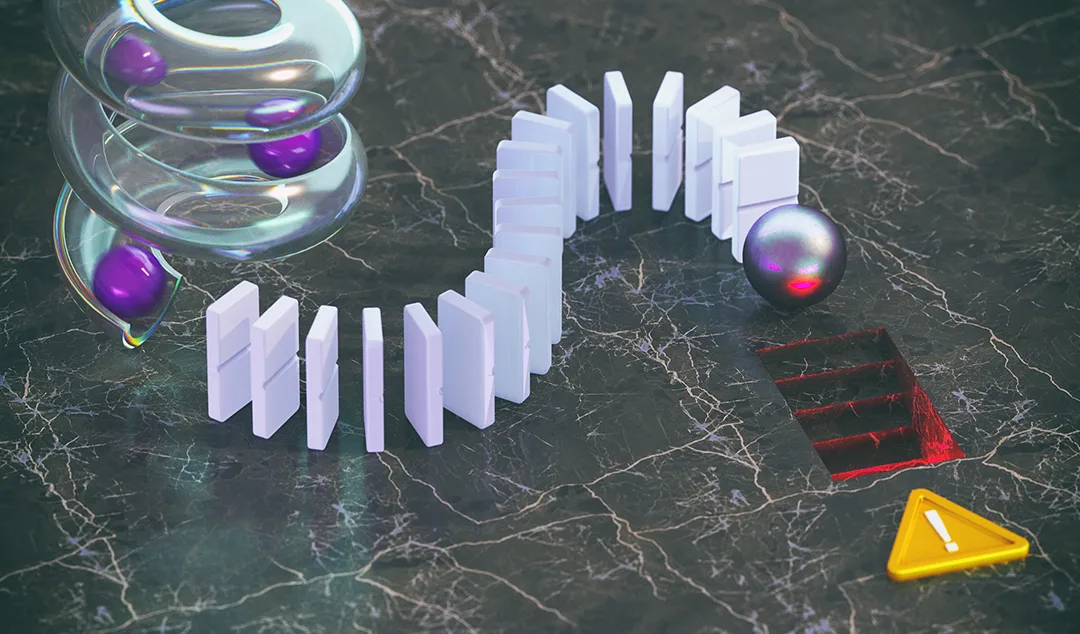

That’s the danger and the opportunity. Automating a good process shows how good that process is. Automating a bad process amplifies its weaknesses.

2. It’s another development project

Treat the test-automation project like any other development project. That means putting together specifications on what it will and will not do, how the code/modules are designed, and so on.

The automation needs to be planned, managed, monitored, and supported just like a real application. It's simply another kind of product development, and thus subject to the same rules and processes as any other software project.

3. Automate the right things

Test automation is an ideal solution in several situations, but don’t force these tools into the wrong role. Among the positive points: It is a good way to get repeatable tests, to expand application coverage, to speed up tests on subsequent versions of the application, and to do regression testing.

But it is automation, not automagic. Don’t expect to automate everything. Some tasks inherently require manual testing.

The testing part still belongs to the testers. Those professionals are responsible for coming up with appropriate input to exercise the application, working out whether the output is both correct and according to spec, and looking for bugs.

4. Get the right people

Apparently, many managers assume that because the automation tools do all the work, an intern can run the software. This attitude gets professional testers’ shorts tied in a knot.

“Get the right staff,” one tester insisted. “This type of work requires the right type of skills and knowledge. Testers need to know how to write code, how the application under test is built, and how to do testing.”

For any nontrivial test-automation project, you need dedicated personnel. As long as the developers have to split their time between automated and manual testing, the test-automation effort never really gets off the ground.

5. Design applications to be tested

Automated or not, QA tests don't come out of nowhere. Programmers should create their applications with testability in mind, or at least with clear communication among the business subject-matter experts, the development staff, and the QA team.

One example of the design-for-test approach is for QA staff to set conventions that developers follow, such as always guaranteeing a unique way to identify each object, whether by its associated text, a title, or a unique ID. Once this standard is in place, check whether the test tool lets you set the order in which it tries identifiers.
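
The idea of trying identifiers in a configured order can be sketched as follows. This is a hypothetical model, not how any particular test tool works: each on-screen element is represented as a dict of attributes, and `locate`, `DEFAULT_ORDER`, and the sample `page` are invented for illustration. The function accepts the first identifier kind that matches exactly one element; if none does, that is a sign the uniqueness convention was broken.

```python
from typing import Dict, Optional, Sequence

# Hypothetical UI snapshot: each element is a dict of identifying attributes.
Element = Dict[str, str]

# Assumed priority order: unique ID first, then title, then visible text.
DEFAULT_ORDER = ("id", "title", "text")


def locate(elements: Sequence[Element], target: Element,
           order: Sequence[str] = DEFAULT_ORDER) -> Optional[Element]:
    """Return the single element matching `target`, trying identifier kinds
    in `order` and accepting the first kind that matches exactly one element."""
    for key in order:
        if key not in target:
            continue  # the target carries no identifier of this kind
        matches = [e for e in elements if e.get(key) == target[key]]
        if len(matches) == 1:
            return matches[0]  # unique match: identification is unambiguous
    return None  # nothing unique: the design-for-test convention was broken


page = [
    {"id": "save-btn", "title": "Save", "text": "Save"},
    {"id": "save-as-btn", "title": "Save as", "text": "Save"},
    {"title": "Cancel", "text": "Cancel"},
]
```

For example, `locate(page, {"text": "Save"})` returns `None` because two buttons share that text, while adding `"id": "save-as-btn"` to the target resolves it unambiguously. That is exactly why a unique-ID convention makes automation more robust.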

Without such conventions, testing slows down. "The main difficulty I faced was the inconsistency of the different warning or error messages the product was returning to the user," a tester told me. "The same message window was used for either a warning or an error, but from the automation point of view it was really hard to figure out if you should list the run as successful or as a failure," she added.
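
One way to remove that ambiguity is for developers to attach an explicit severity to every user-facing message, so automation never has to infer it from the window or the wording. The sketch below is hypothetical: `Severity`, `UserMessage`, and `run_outcome` are invented names, and the rule that warnings alone still count as a pass is an assumed policy your team would need to agree on.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Severity(Enum):
    INFO = "info"
    WARNING = "warning"
    ERROR = "error"


@dataclass(frozen=True)
class UserMessage:
    """A user-facing message tagged with an explicit severity, so a test
    doesn't have to guess from the message window or its text."""
    severity: Severity
    text: str


def run_outcome(messages: List[UserMessage]) -> str:
    """Classify a test run. Assumed policy: any ERROR fails the run;
    warnings alone still pass but are reported separately."""
    if any(m.severity is Severity.ERROR for m in messages):
        return "failure"
    if any(m.severity is Severity.WARNING for m in messages):
        return "success-with-warnings"
    return "success"
```

With the severity carried in the data rather than implied by the UI, the pass/fail decision becomes a one-line rule instead of a judgment call.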